AlphaSpace: A Step Closer to Less Clumsy Robots

Large Language Models (LLMs) handle text with impressive fluency, but ask one to guide a robot in stacking blocks and see what happens. Bridging the gap between language understanding and physical, spatial action remains a significant hurdle. To address this, our research team at Menlo, consisting of Alan Dao (Gia Tuan Dao), Dinh Bach Vu, and Bui Quang Huy, developed AlphaSpace, a methodology for teaching existing models the nuances of navigating and manipulating objects within three-dimensional space through a specialized tokenization approach.

What is AlphaSpace?

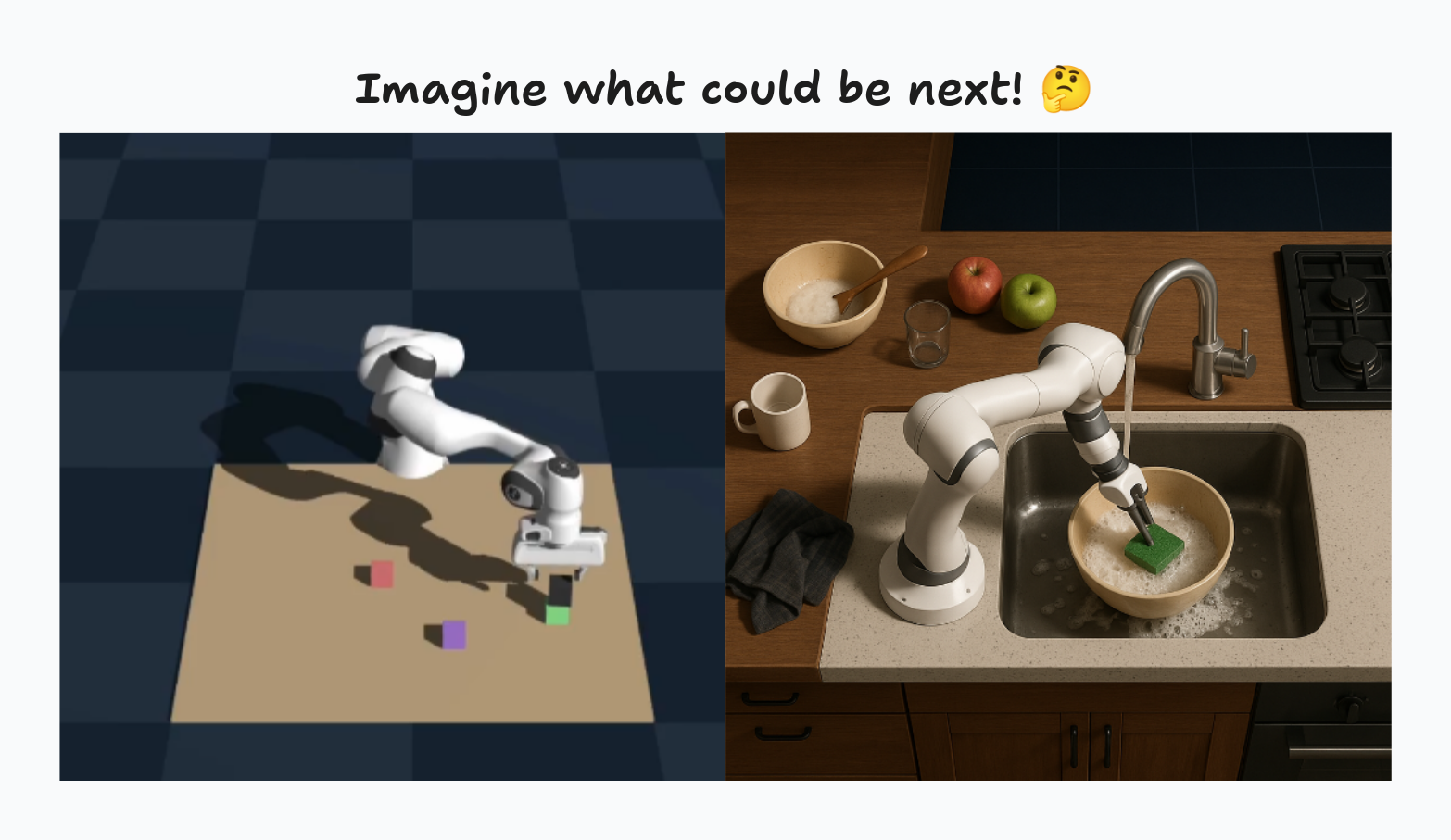

Think of AlphaSpace as a spatial reasoning bootcamp for LLMs. It’s a set of techniques to help models understand and manipulate objects in three dimensions. The goal isn’t to build a new model from scratch, but to enhance existing ones, focusing on practical tasks like picking up, moving, or stacking objects: the kinds of skills that will be crucial for the robots that will one day tidy our homes or assemble our IKEA furniture.

This approach goes beyond understanding language the way we or LLMs do: it sits between symbolic representation and physical action, enabling models to operate outside purely digital constraints. We want to enable the translation of a command like “put the red cube on the blue one” (as shown below) into a series of precise movements in a real (or simulated) space.

How Does It Work?

AlphaSpace’s secret sauce is a technique called semantic tokenization, which translates the details of 3D space (an object’s shape, color, position as x, y coordinates, and even its height as a z-coordinate) into a structured text format that LLMs can digest. Imagine describing a “red apple at position (30, 40) with height 5” entirely through these specialized tokens. The model doesn’t “see” the apple, but it can reason about its properties and location from the description provided.
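To make the idea concrete, here is a minimal sketch of serializing objects into special tokens. The `SceneObject` class, the `tokenize_object` and `tokenize_scene` helpers, and the `<|...|>` token spellings are all hypothetical, chosen for illustration; they are not AlphaSpace’s actual vocabulary or code.

```python
from dataclasses import dataclass


# Hypothetical scene object; field names are illustrative, not AlphaSpace's schema.
@dataclass
class SceneObject:
    color: str
    shape: str
    x: int
    y: int
    z: int  # height above the table


def tokenize_object(obj: SceneObject) -> str:
    """Serialize one object into a run of special tokens (hypothetical <|...|> format)."""
    tokens = [
        f"<|{obj.color}|>",
        f"<|{obj.shape}|>",
        f"<|x-{obj.x}|>",
        f"<|y-{obj.y}|>",
        f"<|z-{obj.z}|>",
    ]
    return "".join(tokens)


def tokenize_scene(objects: list[SceneObject]) -> str:
    # One line per object gives the model a structured, text-only scene description.
    return "\n".join(tokenize_object(o) for o in objects)


apple = SceneObject(color="red", shape="apple", x=30, y=40, z=5)
print(tokenize_object(apple))  # <|red|><|apple|><|x-30|><|y-40|><|z-5|>
```

In this sketch each attribute becomes its own discrete token, so a model trained on such text can refer to color, shape, and position independently.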

This tokenization is hierarchical: it captures both broad locations (a coarse grid) and precise spots (a fine grid). This structured approach lets the LLM “see” through text, bypassing the complex visual processing pipelines that traditional vision-language models rely on.
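A coarse/fine split like this can be sketched with integer division: the coarse token names a grid cell, and the fine token names the offset inside that cell. The cell size (`FINE_PER_COARSE = 10`), the helper names, and the token format below are hypothetical assumptions, not AlphaSpace’s published parameters.

```python
# Hypothetical: each coarse cell is subdivided into 10 x 10 fine cells.
FINE_PER_COARSE = 10


def encode_position(x: int, y: int, fine_per_coarse: int = FINE_PER_COARSE) -> list[str]:
    """Split absolute grid coordinates into a coarse-cell token and a fine-offset token."""
    if x < 0 or y < 0:
        raise ValueError("coordinates must be non-negative")
    cx, fx = divmod(x, fine_per_coarse)  # coarse column, fine offset within it
    cy, fy = divmod(y, fine_per_coarse)  # coarse row, fine offset within it
    return [f"<|coarse-{cx}-{cy}|>", f"<|fine-{fx}-{fy}|>"]


def decode_position(
    coarse: tuple[int, int],
    fine: tuple[int, int],
    fine_per_coarse: int = FINE_PER_COARSE,
) -> tuple[int, int]:
    """Inverse of encode_position: recover absolute coordinates from cell + offset."""
    return (
        coarse[0] * fine_per_coarse + fine[0],
        coarse[1] * fine_per_coarse + fine[1],
    )


print(encode_position(30, 40))  # ['<|coarse-3-4|>', '<|fine-0-0|>']
print(encode_position(37, 42))  # ['<|coarse-3-4|>', '<|fine-7-2|>']
```

Here the two nearby positions share the same coarse token and differ only in the fine token, so broad location and precise placement are encoded separately.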

Once the spatial scene is tokenized, the LLM is trained in two stages, building on the foundation laid by our earlier work on AlphaMaze for maze navigation. First, Supervised Fine-Tuning (SFT) teaches the model the basics using a dataset of tokenized scenes paired with the correct sequence of actions. Then, Group Relative Policy Optimization (GRPO), a reinforcement learning technique, refines the model’s decision-making. In this stage the model learns by trial and error within the safety of a simulated environment, optimizing its strategy for better performance and stronger spatial reasoning. Note, however, that although GRPO was key in AlphaMaze, the current AlphaSpace implementation focuses primarily on SFT using synthetic reasoning data.
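The step that gives GRPO its name is the group-relative advantage: several completions are sampled for the same prompt, and each one’s reward is normalized against the group’s mean and standard deviation instead of against a learned value function (critic). The sketch below shows only that step; the full GRPO objective also includes a clipped policy ratio and a KL penalty, which are omitted here. The binary success reward is a hypothetical example, and, as noted above, the current AlphaSpace implementation relies mainly on SFT.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style advantages: normalize each completion's reward against its own group."""
    mean = statistics.fmean(rewards)
    # Population standard deviation; some implementations use the sample std instead.
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards for four action sequences sampled for the same scene:
# 1.0 if the simulated block ended up in the target position, else 0.0.
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))  # successes get ~+1, failures ~-1
```

If every completion in a group earns the same reward, all advantages are zero and that group contributes no learning signal, which is one reason reward design matters for GRPO.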

Performance and Impact

Our researchers pitted AlphaSpace against other models on EmbodiedBench, a benchmark for evaluating AI agents on embodied, physical tasks. The results were promising: AlphaSpace aced 10 out of 12 picking tasks and managed 6 out of 12 stacking tasks, and the performance of the other models is shown in the table below. This gap suggests an advantage for AlphaSpace’s structured, semantics-based approach over models that rely more heavily on general vision-language understanding, which seem to struggle more with the nitty-gritty of precise manipulation.

| Model | Picking | Stacking | Total Accuracy (%) |
|---|---|---|---|
| AlphaSpace (Ours) | 10/12 | 6/12 | 66.67 |
| GPT-4o | 6/12 | 3/12 | 37.50 |
| Claude 3.5 Sonnet | 5/12 | 2/12 | 29.17 |
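The Total Accuracy column pools both categories, i.e. successes out of all 24 tasks. A quick check of the table’s arithmetic (the `total_accuracy` helper is illustrative only):

```python
TASKS_PER_CATEGORY = 12  # 12 picking + 12 stacking tasks


def total_accuracy(picking: int, stacking: int) -> float:
    """Pooled success rate (%) over both task categories."""
    return 100 * (picking + stacking) / (2 * TASKS_PER_CATEGORY)


results = {
    "AlphaSpace (Ours)": (10, 6),
    "GPT-4o": (6, 3),
    "Claude 3.5 Sonnet": (5, 2),
}

for model, (picking, stacking) in results.items():
    print(f"{model}: {total_accuracy(picking, stacking):.2f}%")
# AlphaSpace (Ours): 66.67%
# GPT-4o: 37.50%
# Claude 3.5 Sonnet: 29.17%
```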

We think AlphaSpace is a tangible step towards models that can meaningfully interact with the physical world. The potential applications are broad, from more capable domestic robots and smarter warehouse automation to more intuitive interfaces for augmented reality or even more autonomous video game characters (imagine an unbounded NPC 🤔).

The success of this experiment underscores the power of symbolic reasoning and structured spatial representation for specific manipulation tasks, offering a different, potentially more efficient path than compute-heavy, vision-centric models for certain applications. It suggests that sometimes, translating the world into the right “language” is more effective than trying to make the model see it directly.

Looking Ahead

While the benchmark results are encouraging, AlphaSpace is still learning to walk, let alone run, in the real world. The current methodology shines in controlled, simulated environments with clearly defined objects and tasks. Adapting it to the chaos of dynamic, unpredictable real-world settings, where objects move, lighting changes, and unexpected obstacles appear, is the next major hurdle.

Research is ongoing to make AlphaSpace more robust and adaptable. Exploring hybrid models that combine AlphaSpace’s symbolic strengths with lightweight visual processing, or integrating reinforcement learning for real-time adaptation, are promising directions. Scaling its capabilities to handle more complex sequences of actions, multi-robot collaboration, or tasks involving object rotation also remains future work.

Furthermore, the initial project scope was narrow: it covered only two types of manipulation tasks (picking and stacking) from the benchmark suite, leaving other spatial challenges unevaluated. More comprehensive testing is needed to fully map its strengths and weaknesses.

Conclusion

AlphaSpace doesn’t yet unlock the full potential of LLMs in robotics and AI, but it is a step in the right direction. As a methodology, it shows that by cleverly translating 3D space into tokens that LLMs understand and combining this with targeted training, we can imbue these models with surprisingly effective spatial reasoning skills for manipulation. Its strong benchmark performance, particularly against leading generalist models, highlights the potential of specialized, structure-aware approaches. By focusing on symbolic representation and reasoning, AlphaSpace offers a lightweight yet powerful pathway towards models that can not only talk the talk but also walk the walk, or at least stack the blocks correctly. The journey to fully embodied AI is long, but AlphaSpace offers a helping hand.

Here are some additional resources to learn more about AlphaSpace:

Acknowledgements

We would like to acknowledge the creators of the DeepSeek and Qwen models for providing the foundation for our work. We also thank the authors of the EmbodiedBench benchmark, which was valuable for evaluating AlphaSpace’s capabilities.

Open Call

We’re calling on researchers to experiment and build in public with us. Join the #research channel on Discord.

We believe that collaborative, open research can accelerate progress in this exciting field. Whether you’re an experienced researcher or an enthusiastic newcomer, your contribution could be valuable.