Research

🌐 Vision: Intelligence as a Continuously Expanding Network

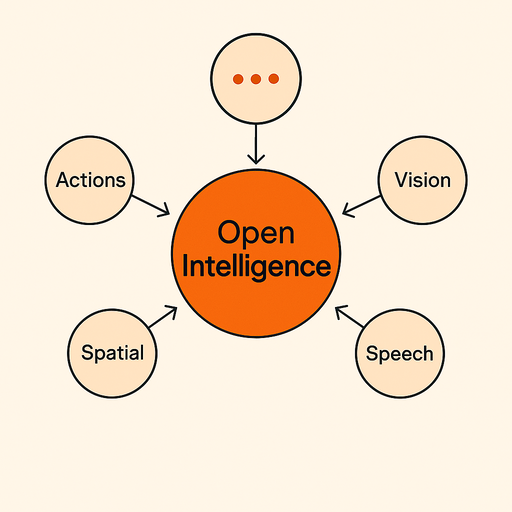

We envision Open Intelligence not as a static system with fixed inputs and outputs, but rather as a dynamic, evolving network capable of integrating diverse forms of perception, reasoning, and interaction. Each new modality—whether visual, auditory, spatial, or yet-to-be-defined—should be able to join the framework organically, enriching the whole while benefiting from its connections.

Rather than defining intelligence by a limited set of preordained capabilities, we aim to construct a platform where novel models can emerge and co-evolve over time, unlocking unforeseen generalization abilities and creative potential.

⚙️ Core Pillars of Our Intelligence Framework

1. Foundational Modalities

These represent the primary building blocks of our current model and are essential for real-world competency:

- Vision: Robust computer vision enabling recognition of objects, scenes, and dynamics in physical environments.

- Speech: Advanced audio processing and natural language generation, supporting fluid dialogue and instruction following.

- Spatial Intelligence: Geometric and topological modeling of space, motion, and environmental structure, critical for navigation and planning.

2. Action & Agency

True intelligence is not passive—it must act and adapt. We focus on tightly coupling perception with actionable outcomes through:

- Embodied Interfaces: Whether robotic actuators, software agents, or digital avatars, these interfaces allow the system to interact meaningfully with both virtual and physical environments. For example, browser automation agents can navigate web interfaces, fill out forms, and extract information, showing how an AI system can act in digital spaces as well as in physical ones.

- Control Policies: Learning adaptive strategies for goal-directed behavior, informed by multimodal inputs and contextual awareness. See our work in AlphaMaze for RL-based spatial reasoning and AlphaSpace for RL-powered robotics planning.

3. Extensible Architecture for New Modalities

Our long-term advantage lies in our ability to grow beyond today’s known modalities. The world carries far richer information than text or images alone can capture. The following modalities illustrate the breadth of possible representations:

- Action & Motion: Movement dynamics and temporal patterns

- Spatial & Environmental: Room layouts and object relationships

- Physical Sensing: Tactile feedback, thermal patterns, and chemical interactions

This diversity shows why any truly intelligent system must be designed for continuous expansion, integrating new forms of perception and understanding as they emerge.
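One way to picture this kind of extensibility is a registry of per-modality encoders that all project into a single shared embedding space, so a new modality can be added without changing existing components. The sketch below is a minimal illustration under stated assumptions, not our implementation: `ModalityRegistry`, the 4-dimensional shared space, the toy encoders, and simple averaging as the fusion step are all hypothetical choices made for clarity.

```python
from typing import Callable, Dict, List

# Hypothetical assumption: every modality encoder maps raw input to a vector
# of the same shared dimension, so downstream components never need to change.
EMBED_DIM = 4
Encoder = Callable[[object], List[float]]


class ModalityRegistry:
    """Hypothetical registry: new modalities plug in without touching the core."""

    def __init__(self, dim: int = EMBED_DIM) -> None:
        self.dim = dim
        self._encoders: Dict[str, Encoder] = {}

    def register(self, name: str, encoder: Encoder) -> None:
        # Refuse silent overwrites so existing modalities stay stable.
        if name in self._encoders:
            raise ValueError(f"modality {name!r} is already registered")
        self._encoders[name] = encoder

    def encode(self, name: str, data: object) -> List[float]:
        if name not in self._encoders:
            raise KeyError(f"unknown modality {name!r}")
        vec = self._encoders[name](data)
        # Enforce the shared-space contract every encoder must satisfy.
        if len(vec) != self.dim:
            raise ValueError(
                f"{name!r} encoder returned dim {len(vec)}, expected {self.dim}"
            )
        return vec

    def fuse(self, inputs: Dict[str, object]) -> List[float]:
        """Average the shared-space embeddings of whichever modalities are present."""
        vecs = [self.encode(name, data) for name, data in inputs.items()]
        if not vecs:
            raise ValueError("no inputs to fuse")
        return [sum(col) / len(vecs) for col in zip(*vecs)]


# Toy encoders standing in for real vision and speech models (hypothetical).
registry = ModalityRegistry()
registry.register("vision", lambda pixels: [float(sum(pixels)), 0.0, 0.0, 0.0])
registry.register("speech", lambda samples: [0.0, float(max(samples)), 0.0, 0.0])
# Later, a tactile modality joins without any change to the code above.
registry.register("tactile", lambda pressure: [0.0, 0.0, float(pressure), 0.0])

fused = registry.fuse({"vision": [1, 2, 3], "tactile": 0.5})
print(fused)  # [3.0, 0.0, 0.25, 0.0]
```

The design choice being illustrated is the fixed contract (a shared embedding dimension) rather than the toy encoders or the averaging step; a real system would likely use learned projections and a learned fusion mechanism.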

🔄 Strategic Approach: Lean, Modular, and Principles-Driven

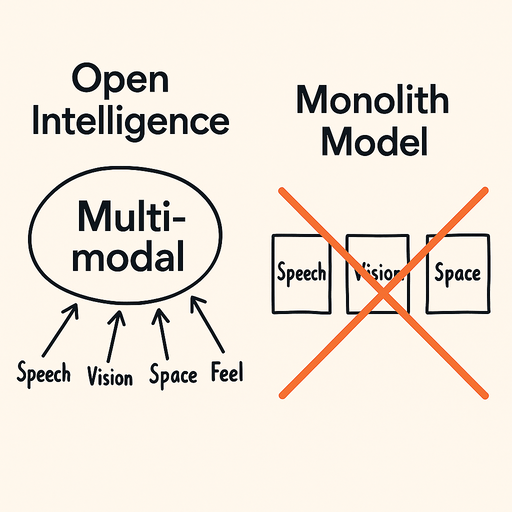

1. Why Monolithic Architectures Are Not Feasible and Why Modality Expansion Is Important

Over the past few years, the most successful attempts to tackle the challenges of General Intelligence have focused on expanding the modalities of pretrained models. The core argument is that we should leverage pretraining data to help models generalize beyond their original domains.

For many years, AI research has been held back by a severe scarcity of data. Many startups and companies have been founded, and have failed, solely to build tools for data collection.

We strongly believe that Open, General Intelligence should focus on expanding modalities and building on existing pretrained models. Only in this way can Open Intelligence truly be open: efforts build upon each other rather than remaining isolated, one-shot attempts.

2. Iterative Exploration with Resource Constraints

To stay lean, we prioritize research that extracts maximum value from minimal resources, structured as follows:

- Sprint Goals: Focus on narrowly scoped questions such as:

  - Can a decoder-only model reason over vision-based representations through RL? See our work in AlphaMaze for an example.

- Success Criteria: Evidence that the model performs well on the target question and its edge cases.

📈 Outlook: Growing Toward General Intelligence

By designing intelligence as a growing constellation of interdependent modalities, we transition from rigid, predefined systems to more flexible, adaptive, and ultimately more powerful solutions.

In this future:

- A system initially trained on vision and speech might later absorb tactile feedback to improve object manipulation.

- An agent deployed in logistics could reuse spatial reasoning models for urban planning or robotics.

- Unexpected cross-modal insights will drive innovation in domains we haven’t yet imagined.

Our R&D effort isn’t about ticking off familiar modalities; it’s about building the infrastructure to support perpetual evolution. In doing so, we position ourselves not only to meet today’s challenges but to lead the next wave of breakthroughs in open-ended multimodal intelligence.