Enabling GPUDirect P2P in OpenStack VMs

It started with a simple question: “Can we test NVIDIA’s GPUDirect P2P to see how much it helps with LLM inference?”

What seemed like an afternoon task turned into a multi-day journey through QEMU internals, libvirt XML quirks, and eventually, a wrapper script that intercepts the hypervisor’s launch command. The kind of problem where every layer of abstraction fights you, until you find the one place where you can actually make a change.

The solution ended up being surprisingly simple. But getting there required understanding why the obvious approaches don’t work.

With this solution, we achieved up to 137% higher bidirectional bandwidth and 97% lower GPU-to-GPU latency in OpenStack VMs.

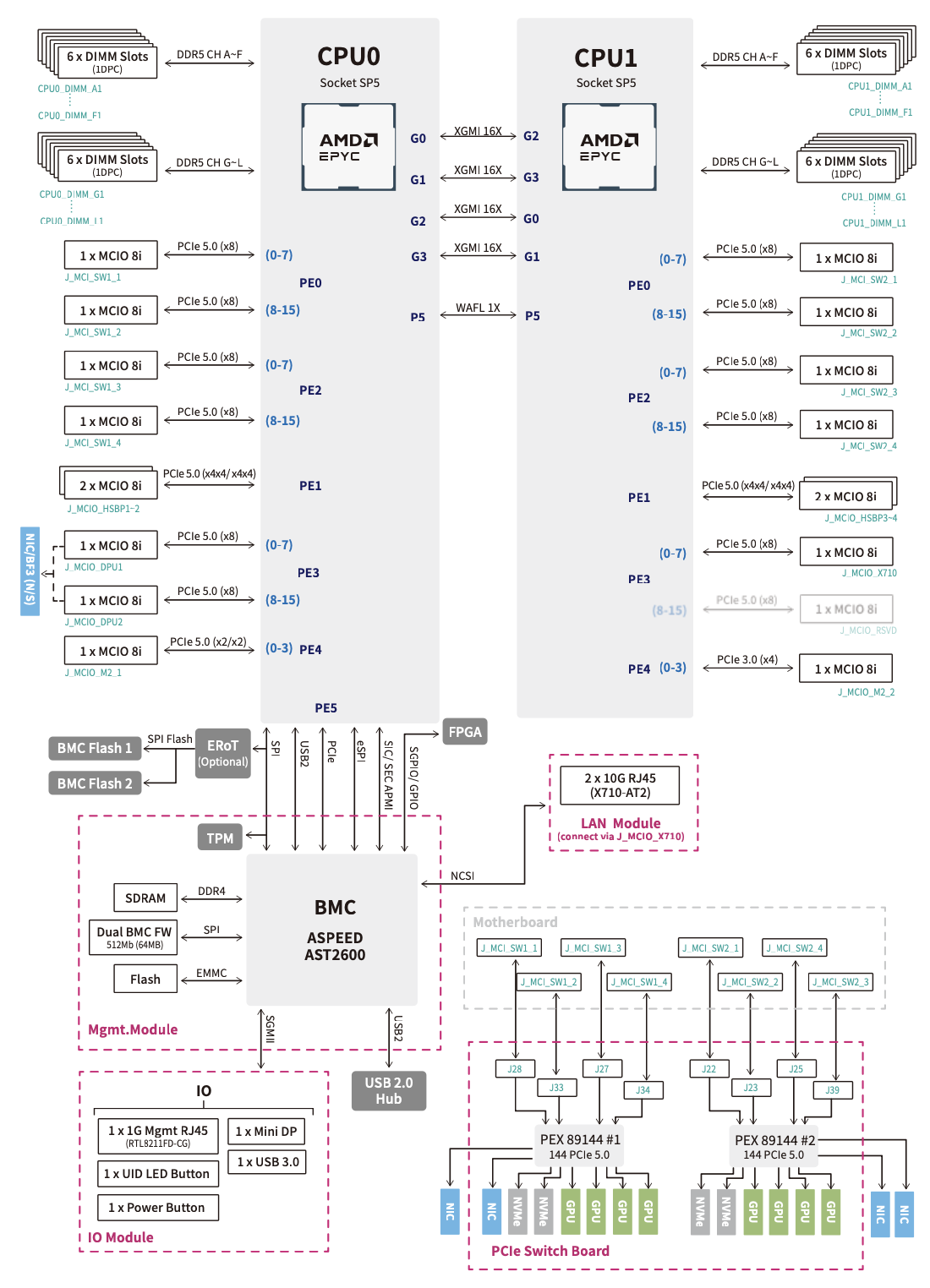

Test hardware: MSI CG480-S6053 with 8x RTX PRO 6000 Blackwell GPUs

Why GPU P2P Is Disabled in OpenStack VMs

Standard OpenStack PCIe passthrough provides VMs direct access to physical GPUs, but GPU-to-GPU communication is disabled by default. Running nvidia-smi topo -p2p r inside a VM shows every GPU pair as NS (Not Supported):

GPU0 GPU1 GPU2 GPU3 ...

GPU0 X NS NS NS

GPU1 NS X NS NS

GPU2 NS NS X NS

Without P2P, all inter-GPU data transfers route through system RAM and the CPU. This is a significant bottleneck for multi-GPU workloads like distributed training and inference.
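With eight GPUs the matrix is easy to misread by eye. The minimal sketch below (our own helper, not an NVIDIA tool) reads nvidia-smi topo -p2p r output on stdin and lists every pair that is not OK, so the same check can run on the host and later inside the VM. It assumes one row per GPU, in index order, starting with a GPUn label as in the output above.

```bash
#!/usr/bin/env bash
# Minimal sketch: list GPU pairs that are not "OK" in `nvidia-smi topo -p2p r`
# output read on stdin. Assumes one matrix row per GPU, in index order, that
# starts with a "GPUn" label and has "X" on the diagonal.
# Prints "GPUi-GPUj STATUS" for each non-OK pair (i < j); returns 1 if any exist.
p2p_non_ok_pairs() {
  local line i j bad=0
  local -a cells rows=()
  while IFS= read -r line; do
    read -r -a cells <<< "$line"
    # Keep matrix rows ("GPU0 X OK ..."); skip the header row of GPU labels
    # and the legend lines.
    if [[ "${cells[0]:-}" =~ ^GPU[0-9]+$ && -n "${cells[1]:-}" && "${cells[1]}" != GPU* ]]; then
      rows+=("$line")
    fi
  done
  for ((i = 0; i < ${#rows[@]}; i++)); do
    read -r -a cells <<< "${rows[i]}"
    # cells[0] is the row label; cells[j + 1] is the status towards GPU j.
    for ((j = i + 1; j + 1 < ${#cells[@]}; j++)); do
      if [[ "${cells[j + 1]}" != "OK" ]]; then
        echo "GPU$i-GPU$j ${cells[j + 1]}"
        bad=1
      fi
    done
  done
  return "$bad"
}

# Example: nvidia-smi topo -p2p r | p2p_non_ok_pairs && echo "all pairs OK"
```

An empty result with exit status 0 means every pair reports OK.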

Verifying GPUDirect P2P Support on Bare Metal

First, confirm P2P works on bare metal. Install NVIDIA drivers directly on the host and run the topology check:

nvidia-smi topo -p2p r

GPU0 GPU1 GPU2 GPU3 ...

GPU0 X OK OK OK

GPU1 OK X OK OK

All OK. The hardware supports P2P. NVIDIA’s P2P bandwidth test quantifies the difference:

| Metric | P2P Disabled | P2P Enabled | Improvement |
|---|---|---|---|
| Unidirectional Bandwidth | ~40 GB/s | ~53 GB/s | +32% |
| Bidirectional Bandwidth | ~43 GB/s | ~102 GB/s | +137% |
| GPU-to-GPU Latency | ~14 μs | ~0.45 μs | ~97% lower |

The goal is to preserve these gains inside VMs.
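The Improvement column follows directly from the rounded measurements. As a sanity check, this short sketch recomputes it; awk does the floating-point math because bash arithmetic is integer-only, and pct_change is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# pct_change OLD NEW: print (NEW - OLD) / OLD as a percentage with one decimal.
# Negative results mean the value went down (e.g. lower latency).
pct_change() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - old) * 100 / old }'
}

pct_change 40 53     # unidirectional bandwidth → 32.5
pct_change 43 102    # bidirectional bandwidth  → 137.2
pct_change 14 0.45   # latency in μs            → -96.8
```

The results line up with the table: 32.5% (listed as +32%), +137%, and roughly 97% lower latency.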

QEMU x-nv-gpudirect-clique Parameter

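QEMU supports a parameter called x-nv-gpudirect-clique that groups passthrough GPUs into “cliques.” GPUs assigned to the same clique can perform P2P transfers: QEMU exposes the clique ID to the guest through a vendor-specific PCI capability on each GPU, and the NVIDIA driver in the guest uses it to decide which GPUs may talk to each other directly. The x- prefix marks it as an experimental QEMU property. This feature was discussed in a 2017 QEMU mailing list thread, with additional reports on Reddit’s /r/VFIO.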

Syntax:

-device vfio-pci,host=05:00.0,x-nv-gpudirect-clique=0 \

-device vfio-pci,host=06:00.0,x-nv-gpudirect-clique=0

GPUs with the same clique ID can communicate directly. The challenge is getting this parameter into OpenStack-managed VMs.
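When you control the QEMU command line directly (for example in a manual test outside OpenStack), the per-GPU arguments can be generated rather than typed. This sketch uses a hypothetical helper, clique_device_args, and rejects clique IDs outside 0-15, which as far as we can tell from QEMU’s source is the range its vfio code accepts (a 4-bit field).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a "-device" / "vfio-pci,..." argument pair for each
# GPU host address, all in the same clique. One argument per line, so the
# output can be loaded into a bash array with mapfile.
clique_device_args() {
  local clique="$1"
  shift
  # Assumption: QEMU only accepts clique IDs 0-15; reject anything else early.
  if [[ ! "$clique" =~ ^([0-9]|1[0-5])$ ]]; then
    echo "invalid clique ID: $clique (expected 0-15)" >&2
    return 1
  fi
  local host
  for host in "$@"; do
    printf '%s\n' -device "vfio-pci,host=${host},x-nv-gpudirect-clique=${clique}"
  done
}

# Usage sketch (outside OpenStack):
#   mapfile -t gpu_args < <(clique_device_args 0 05:00.0 06:00.0)
#   qemu-system-x86_64 <other options> "${gpu_args[@]}"
```

Emitting one argument per line keeps each device spec intact as a single array element, even if it later contains characters the shell would otherwise split on.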

Why Libvirt XML Configuration Fails

The obvious approach is to modify libvirt’s domain XML to inject the parameter.

<hostdev mode='subsystem' type='pci'>

<source>

<address domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>

</source>

<qemu:commandline>

<qemu:arg value='-device'/>

<qemu:arg value='vfio-pci,host=05:00.0,x-nv-gpudirect-clique=0'/>

</qemu:commandline>

</hostdev>

Two problems make this approach impractical:

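- Libvirt sanitizes XML. It rebuilds the domain definition from its own parsed model, so anything it does not recognize at that position is reformatted or dropped; <qemu:commandline> is only honored as a direct child of <domain> with the qemu XML namespace declared.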

- OpenStack regenerates XML. Nova regenerates the entire domain XML from its templates on every VM launch.

This approach works for one-off testing but not for production deployments.

Solution: QEMU Wrapper Script for GPU P2P

The call chain is: OpenStack → Nova → libvirt → QEMU

At the end of that chain, libvirt launches qemu-system-x86_64 with the complete argument list, which you can confirm with:

ps aux | grep qemu-system-x86_64

The solution is to replace the QEMU binary with a wrapper script that:

- Intercepts all original arguments

- Identifies GPU passthrough devices

- Injects x-nv-gpudirect-clique based on PCIe topology

- Executes the real QEMU with modified arguments

Before deploying, run nvidia-smi topo -p2p r on your host to check P2P support. GPUs that report OK with each other can share a clique ID; GPUs that report NS for a pair need different cliques or should not use P2P at all.
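Turning that rule into GPU_CLIQUE_MAP entries by hand is error-prone on larger hosts. The sketch below is a hypothetical generator: it groups GPUs greedily from the P2P matrix (each unassigned GPU starts a clique and pulls in every later GPU it reports OK with), which is only correct if OK is transitive across the host. It also converts nvidia-smi’s bus IDs (typically 00000000:F4:00.0, with uppercase hex) to the lowercase 0000:f4:00.0 form that libvirt passes to QEMU.

```bash
#!/usr/bin/env bash
# Hypothetical generator for GPU_CLIQUE_MAP entries. Assumes "OK" is transitive
# (GPUs form disjoint all-OK groups) and matrix rows appear in GPU index order.

# stdin: `nvidia-smi topo -p2p r` output; stdout: "<gpu_index> <clique_id>".
assign_cliques() {
  local line i j next=0
  local -a cells rows=() clique=()
  while IFS= read -r line; do
    read -r -a cells <<< "$line"
    # Keep matrix rows ("GPU0 X OK ..."); skip the header row and the legend.
    if [[ "${cells[0]:-}" =~ ^GPU[0-9]+$ && -n "${cells[1]:-}" && "${cells[1]}" != GPU* ]]; then
      rows+=("$line")
    fi
  done
  for ((i = 0; i < ${#rows[@]}; i++)); do
    [[ -n "${clique[i]:-}" ]] && continue   # already grouped
    clique[i]=$next
    read -r -a cells <<< "${rows[i]}"
    # Pull every later, still unassigned GPU that is OK with GPU i into this clique.
    for ((j = i + 1; j < ${#rows[@]}; j++)); do
      if [[ -z "${clique[j]:-}" && "${cells[j + 1]:-}" == "OK" ]]; then
        clique[j]=$next
      fi
    done
    next=$((next + 1))
  done
  for ((i = 0; i < ${#rows[@]}; i++)); do
    echo "$i ${clique[i]}"
  done
}

# nvidia-smi prints bus IDs like "00000000:F4:00.0"; libvirt passes "0000:f4:00.0".
to_libvirt_addr() {
  local id="${1,,}"   # lowercase the hex digits
  echo "${id: -12}"   # keep the trailing "dddd:bb:ss.f" (12 characters)
}

# stdin: "<gpu_index> <clique_id>" lines; args: bus IDs in GPU index order.
# stdout: lines ready to paste into GPU_CLIQUE_MAP.
emit_map_entries() {
  local -a bus_ids=("$@")
  local idx cid
  while read -r idx cid; do
    printf '["%s"]="%s"\n' "$(to_libvirt_addr "${bus_ids[idx]}")" "$cid"
  done
}

# Example (on the host, assuming nvidia-smi lists GPUs in index order):
#   nvidia-smi topo -p2p r | assign_cliques |
#     emit_map_entries $(nvidia-smi --query-gpu=pci.bus_id --format=csv,noheader)
```

Review the generated entries against the matrix before pasting them into the wrapper; if some GPU reports OK with two GPUs that report NS with each other, the greedy grouping is not valid for that host.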

Here’s the complete wrapper script:

#!/bin/bash

#

# QEMU Wrapper Script for NVIDIA GPU P2P (GPUDirect) Support

#

# How it works:

# 1. Intercepts all QEMU calls from libvirt/Nova

# 2. Scans for GPU passthrough devices (-device vfio-pci)

# 3. Injects x-nv-gpudirect-clique parameter based on PCIe topology

# 4. Executes the real QEMU with modified arguments

REAL_QEMU="/usr/bin/qemu-system-x86_64.real"

LOG_FILE="/var/log/qemu-p2p-wrapper.log"

ENABLE_LOGGING="${QEMU_P2P_WRAPPER_LOG:-0}"

# ============================================================

# GPU CLIQUE MAPPING - CUSTOMIZE THIS FOR YOUR HARDWARE

# ============================================================

# Run `nvidia-smi topo -p2p r` to find your GPU PCIe addresses

# GPUs that show "OK" for P2P should share the same clique ID

# Our 8 GPUs all support full P2P mesh → all in clique 0

declare -A GPU_CLIQUE_MAP=(

["0000:05:00.0"]="0"

["0000:06:00.0"]="0"

["0000:76:00.0"]="0"

["0000:77:00.0"]="0"

["0000:85:00.0"]="0"

["0000:86:00.0"]="0"

["0000:f4:00.0"]="0"

["0000:f5:00.0"]="0"

)

# ============================================================

# HELPER FUNCTIONS

# ============================================================

log_message() {

if [[ "$ENABLE_LOGGING" == "1" ]]; then

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" >> "$LOG_FILE"

fi

}

get_clique_id() {

local pcie_addr="$1"

# Normalize to the lowercase, domain-prefixed form used as map keys (e.g. 0000:05:00.0)

pcie_addr="${pcie_addr,,}"

if [[ ! "$pcie_addr" =~ ^[0-9a-f]{4}: ]]; then

pcie_addr="0000:$pcie_addr"

fi

if [[ -v GPU_CLIQUE_MAP["$pcie_addr"] ]]; then

echo "${GPU_CLIQUE_MAP[$pcie_addr]}"

else

echo ""

fi

}

modify_vfio_device() {

local device_json="$1"

# Only process vfio-pci devices (GPU passthrough)

if [[ "$device_json" =~ \"driver\":\"vfio-pci\" ]]; then

if [[ "$device_json" =~ \"host\":\"([0-9a-fA-F:\.]+)\" ]]; then

local pcie_addr="${BASH_REMATCH[1]}"

local clique_id

clique_id=$(get_clique_id "$pcie_addr")

if [[ -n "$clique_id" ]]; then

log_message "Found GPU at $pcie_addr -> clique $clique_id"

# Inject clique parameter if not already present

if [[ ! "$device_json" =~ x-nv-gpudirect-clique ]]; then

# Drop the closing brace, append the property, then close the object again

device_json="${device_json%\}},\"x-nv-gpudirect-clique\":${clique_id}}"

log_message "Modified device JSON: $device_json"

fi

else

log_message "GPU at $pcie_addr not in clique map, skipping"

fi

fi

fi

echo "$device_json"

}

# ============================================================

# MAIN: Parse arguments and inject clique parameters

# ============================================================

log_message "=== QEMU P2P Wrapper started ==="

log_message "Original args: $*"

new_args=()

while [[ $# -gt 0 ]]; do

arg="$1"

case "$arg" in

-device)

shift

if [[ $# -gt 0 ]]; then

device_spec="$1"

# Recent libvirt versions pass -device specs as JSON objects; other forms pass through unchanged

if [[ "$device_spec" =~ ^\{.*\}$ ]]; then

modified_spec=$(modify_vfio_device "$device_spec")

new_args+=("-device" "$modified_spec")

else

new_args+=("-device" "$device_spec")

fi

else

new_args+=("-device")

fi

;;

*)

new_args+=("$arg")

;;

esac

shift

done

log_message "Modified args: ${new_args[*]}"

log_message "Executing: $REAL_QEMU ${new_args[*]}"

# Hand off to real QEMU

exec "$REAL_QEMU" "${new_args[@]}"

Installing the QEMU Wrapper

# Move the real binary aside (the wrapper execs it from this path)

sudo mv /usr/bin/qemu-system-x86_64 /usr/bin/qemu-system-x86_64.real

# Deploy wrapper (save the script above as qemu-wrapper.sh first)

sudo cp qemu-wrapper.sh /usr/bin/qemu-system-x86_64

sudo chmod +x /usr/bin/qemu-system-x86_64

# Restart services

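sudo systemctl restart libvirtd nova-compute

The wrapper only affects QEMU processes started after installation, so running VMs need a stop/start or hard reboot before they get the clique parameter. To debug, set the QEMU_P2P_WRAPPER_LOG=1 environment variable and check /var/log/qemu-p2p-wrapper.log to see which devices are being modified. Libvirt may start QEMU with a reduced environment and as an unprivileged user, so if no log appears, set ENABLE_LOGGING=1 in the script and make sure the log file is writable by that user.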
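To confirm the injection on a running VM, inspect the arguments of its QEMU process. ps joins arguments with spaces, while /proc/<pid>/cmdline keeps them NUL-separated, which makes the JSON device specs easy to pull out. A minimal sketch (the helper name vfio_devices_from_cmdline is ours):

```bash
#!/usr/bin/env bash
# Minimal sketch: print the vfio-pci "-device" values from a NUL-separated argv
# file such as /proc/<pid>/cmdline, to check whether x-nv-gpudirect-clique
# was injected.
vfio_devices_from_cmdline() {
  local file="$1" arg want_next=0
  while IFS= read -r -d '' arg; do
    if (( want_next )); then
      # This argument is the value of the preceding "-device" flag.
      [[ "$arg" == *vfio-pci* ]] && printf '%s\n' "$arg"
      want_next=0
    elif [[ "$arg" == "-device" ]]; then
      want_next=1
    fi
  done < "$file"
}

# Example (on the compute node):
#   for pid in $(pgrep -f qemu-system-x86_64); do
#     vfio_devices_from_cmdline "/proc/$pid/cmdline"
#   done
```

Each vfio-pci line should end with the x-nv-gpudirect-clique property if the wrapper did its job.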

GPUDirect P2P Performance Results

After deploying the wrapper, the topology check inside the VM shows:

GPU0 GPU1 GPU2 GPU3 ...

GPU0 X OK OK OK

GPU1 OK X OK OK

All OK. P2P is enabled.

Bandwidth comparison:

| Metric | VM (No P2P) | VM (With P2P) | Bare Metal |
|---|---|---|---|
| Bidirectional Bandwidth | ~42-55 GB/s | ~54-102 GB/s | ~54-102 GB/s |
| P2P Connectivity | ❌ None | ✅ Full mesh | ✅ Full mesh |

VMs achieve up to 81% higher bidirectional bandwidth for closely connected GPU pairs.

Key Takeaways for GPU Virtualization

Bypass OpenStack and Libvirt Abstractions

Libvirt and OpenStack aren’t designed for this use case. Intercepting at the QEMU level is simpler than modifying higher-level components.

PCIe Topology Affects P2P Performance

Not all GPU pairs support P2P equally. Use nvidia-smi topo -m to check connection types:

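- PIX (at most a single PCIe bridge, e.g. the same PCIe switch) → best P2P, same clique

- NODE (crosses PCIe host bridges within one NUMA node) → possible P2P, test first

- SYS (crosses the inter-socket interconnect between NUMA nodes) → poor P2P, consider separate cliques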
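The guidance above can be encoded as a small lookup, for example in a provisioning script that reviews each GPU pair before filling in GPU_CLIQUE_MAP. This sketch covers only the three codes listed; sending every other code (such as PXB, PHB or NV#) to a direct P2P check is our assumption, not a rule from NVIDIA.

```bash
#!/usr/bin/env bash
# Sketch: map an `nvidia-smi topo -m` link code to the clique guidance above.
# Only PIX, NODE and SYS are covered; other codes fall back to a direct check.
clique_hint() {
  case "$1" in
    X)    echo "self" ;;
    PIX)  echo "same clique (best P2P)" ;;
    NODE) echo "possible P2P: test before sharing a clique" ;;
    SYS)  echo "poor P2P: consider separate cliques" ;;
    *)    echo "not covered: check nvidia-smi topo -p2p r for this pair" ;;
  esac
}

# Example: for link in PIX NODE SYS PXB; do echo "$link: $(clique_hint "$link")"; done
```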

VM vs Bare Metal GPU Performance

Even with P2P enabled, VMs reach ~75-85% of bare metal bandwidth. For latency-critical workloads, bare metal remains preferable.

QEMU Wrapper Maintenance

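The wrapper script must be reapplied after QEMU package updates: the update installs the new QEMU binary over the wrapper at /usr/bin/qemu-system-x86_64, while the old binary stays at qemu-system-x86_64.real, so repeat both installation steps. Include it in infrastructure-as-code and set up alerts for package changes.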
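A periodic check (a cron job or monitoring probe) can catch that drift. The sketch below is a hypothetical helper; it assumes the wrapper is a script containing the string x-nv-gpudirect-clique, which holds for the script above. On Debian/Ubuntu hosts, registering a diversion with dpkg-divert --add --rename --divert /usr/bin/qemu-system-x86_64.real /usr/bin/qemu-system-x86_64 before copying the wrapper in place makes dpkg install future QEMU binaries at the .real path instead, which is an option worth evaluating alongside the check.

```bash
#!/usr/bin/env bash
# Hypothetical drift check: succeed only if the QEMU path still holds the P2P
# wrapper and the real binary it execs is present and executable.
check_p2p_wrapper() {
  local qemu="${1:-/usr/bin/qemu-system-x86_64}"
  local real="${2:-${qemu}.real}"
  # A package update puts an ELF binary back at this path; the wrapper starts with "#!".
  if [[ "$(head -c 2 "$qemu" 2>/dev/null)" != '#!' ]]; then
    echo "WARN: $qemu is not a script; a QEMU update may have replaced the wrapper" >&2
    return 1
  fi
  if ! grep -q 'x-nv-gpudirect-clique' "$qemu"; then
    echo "WARN: $qemu is a script but not the P2P wrapper" >&2
    return 1
  fi
  if [[ ! -x "$real" ]]; then
    echo "WARN: $real is missing or not executable" >&2
    return 1
  fi
  echo "OK: P2P wrapper in place at $qemu"
}

# Example (cron): check_p2p_wrapper || logger -t qemu-p2p "wrapper drift detected"
```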

What’s Next

There’s a broader lesson here about virtualization and AI infrastructure.

Cloud providers abstract away hardware details for good reasons. But AI workloads are different. They push hardware to its limits in ways that general-purpose abstractions weren’t designed for. Sometimes you need to reach through those abstractions and touch the metal.

We’re planning to run comprehensive LLM training benchmarks comparing single GPU, multi-GPU without P2P, and multi-GPU with P2P. Based on the bandwidth improvements we’re seeing, we expect 15-30% better training throughput for data-parallel workloads and 10-20% lower inference latency for model parallelism.

The wrapper script is a hack. It works, it’s maintainable, and it unlocks real performance. Sometimes that’s what matters.